
DeepSeek: Chinese AI model makes Wall Street tremble

by Samuel Buchmann

The Chinese company Moonshot AI has unveiled a new AI model. According to the company, it matches or surpasses other large models, and it is also partially open source.

At the beginning of the year, the newly launched Chinese AI model Deepseek caused quite a stir. According to the developers, it offered higher performance than its Western competitors - at significantly lower costs. The share prices of several tech companies fell sharply as a result.

Now even more competition is coming from China: the new model «Kimi K2» from the company Moonshot AI could mark a turning point in the development of open AI systems. The system uses its resources efficiently and can therefore be run comparatively cheaply, at least according to Moonshot.

In addition, unlike most of its competitors, Kimi K2 is partially open source and may even be used for commercial purposes. This could make the model a serious alternative to the dominant proprietary models such as OpenAI's GPT and Anthropic's Claude. Kimi K2 also outperforms Deepseek V3 in many areas.

According to Moonshot, Kimi K2 excels at specialist knowledge, maths and programming. The model is also optimised for agentic tasks: it can use tools such as browsers and databases, plan and carry out multi-step tasks, and thus act as a digital agent. Moonshot's website shows several examples of Kimi K2 performing such tasks.

The K2 model is based on a Mixture of Experts (MoE) architecture. It is made up of 384 specialised sub-networks, so-called «experts». These can specialise in areas such as translation, mathematical reasoning or contextual understanding. Instead of activating all experts for every input, the model activates only those that can contribute to the task at hand. A «router» decides which ones these are. In Kimi K2, eight specialised experts and one shared («global») expert are combined for each token, i.e. for each small chunk of the input text.
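The routing step can be illustrated with a toy model. The following is a minimal sketch, not Moonshot's implementation: it assumes a simple router that scores every expert with a dot product, keeps the eight highest-scoring ones and weights them with a softmax over those eight (one common gating variant; the gating function Kimi K2 actually uses may differ). The shared expert always runs. The hidden size, the router weights and the "experts" themselves (which merely scale the input) are hypothetical stand-ins for the real feed-forward networks.

```python
import math
import random

# Sizes taken from the article: 384 routed experts, 8 chosen per token,
# plus one shared expert. DIM is a hypothetical, tiny hidden size.
NUM_EXPERTS = 384
TOP_K = 8
DIM = 16

rng = random.Random(0)  # fixed seed so the toy example is reproducible


def random_vector(dim):
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


# Hypothetical router: one score vector per expert (learned in a real model).
router_weights = [random_vector(DIM) for _ in range(NUM_EXPERTS)]

# Hypothetical experts: each one just scales the input by its own factor.
# In a real MoE layer, every expert is a small feed-forward network.
expert_scales = [rng.uniform(0.5, 1.5) for _ in range(NUM_EXPERTS)]
SHARED_SCALE = 1.0


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def route(token):
    """Return the indices of the TOP_K best-scoring experts and their gate weights."""
    scores = [dot(token, w) for w in router_weights]
    top = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    # Softmax over the selected experts only, so the gate weights sum to 1.
    m = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - m) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


def moe_layer(token):
    experts, gates = route(token)
    # The shared expert processes every token, regardless of the router.
    out = [SHARED_SCALE * x for x in token]
    # Only the selected experts do any work; the other 376 are skipped.
    for idx, gate in zip(experts, gates):
        for d in range(DIM):
            out[d] += gate * expert_scales[idx] * token[d]
    return out, experts


token = random_vector(DIM)
output, used = moe_layer(token)
print(f"{len(used)} of {NUM_EXPERTS} routed experts used (+1 shared)")
```

The point of the design is visible in `moe_layer`: the cost of a token depends on the eight selected experts plus the shared one, not on the total number of experts.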

The model has a total of one trillion parameters. Because only a handful of experts are active at a time, however, only 32 billion of them are used for each token. This significantly reduces the computing effort. According to estimates, classic models such as GPT-4.1 (around 1.8 trillion parameters) and Claude 4 (around 300 billion parameters) use all of their parameters simultaneously, although OpenAI and Anthropic do not disclose the architecture or size of their models. Deepseek V3 is also an MoE model. It has 671 billion parameters, of which 37 billion are activated per token. Kimi K2 therefore has a higher capacity than Deepseek V3 while using fewer resources per token.
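The comparison with Deepseek V3 comes down to simple arithmetic. The sketch below uses the parameter counts given in the article and, as an added assumption, the common rule of thumb that a forward pass costs roughly 2 floating-point operations per active parameter per token. It is a rough estimate, not a figure published by either company.

```python
# Parameter counts in billions, as given in the article.
models = {
    "Kimi K2": {"total": 1000, "active": 32},
    "Deepseek V3": {"total": 671, "active": 37},
}

for name, p in models.items():
    share = p["active"] / p["total"]
    # Rule of thumb (assumption): ~2 FLOPs per active parameter per token,
    # so billions of active parameters times 2 gives GFLOPs per token.
    gflops_per_token = 2 * p["active"]
    print(f"{name}: {share:.1%} of parameters active, ~{gflops_per_token} GFLOPs per token")

# Per-token compute of Kimi K2 relative to Deepseek V3.
ratio = models["Kimi K2"]["active"] / models["Deepseek V3"]["active"]
print(f"Kimi K2 per-token compute relative to Deepseek V3: {ratio:.0%}")
```

On these numbers, Kimi K2 keeps about 3.2 per cent of its parameters active per token versus about 5.5 per cent for Deepseek V3, and needs roughly 86 per cent of Deepseek V3's per-token compute despite having more parameters in total.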

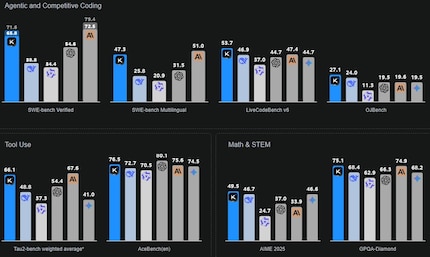

Various benchmarks show that Kimi K2 is on a par with the other large models or even outperforms them, despite using fewer resources. One example is the coding benchmark LiveCodeBench, where Kimi K2 achieved an accuracy of 53.7 per cent, compared with 46.9 per cent for Deepseek V3 and 44.7 per cent for GPT-4.1. On Math-500, Kimi K2 achieved 97.4 per cent, compared with 92.4 per cent for GPT-4.1. All of these figures come from Moonshot itself.
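To put the reported scores in perspective, the short sketch below computes Kimi K2's lead in percentage points. The numbers are the ones Moonshot published, as quoted above; they have not been independently verified, and the helper `margins` is purely illustrative.

```python
# Benchmark scores in per cent, as reported by Moonshot.
scores = {
    "LiveCodeBench": {"Kimi K2": 53.7, "Deepseek V3": 46.9, "GPT-4.1": 44.7},
    "Math-500": {"Kimi K2": 97.4, "GPT-4.1": 92.4},
}


def margins(benchmark):
    """Return Kimi K2's lead over each other model, in percentage points."""
    results = scores[benchmark]
    kimi = results["Kimi K2"]
    # Round to one decimal to hide floating-point noise in the subtraction.
    return {m: round(kimi - s, 1) for m, s in results.items() if m != "Kimi K2"}


for bench in scores:
    print(bench, margins(bench))
```

By this count, Kimi K2 leads by 6.8 points over Deepseek V3 and 9.0 points over GPT-4.1 on LiveCodeBench, and by 5.0 points over GPT-4.1 on Math-500.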

Kimi K2 is available in two versions: «Base» and «Instruct». The Base model is only pre-trained and serves as a foundation for further, specialised training by development teams. The Instruct version is designed for chat and direct interaction with end users: it can understand and carry out instructions. You can try out the Instruct model for yourself at kimi.com.

Both versions are partially open source, meaning they are publicly available and open to further development. Companies, research institutions and private individuals can host Kimi K2 on their own hardware and integrate it into their own projects. Commercial use is also permitted, subject to minor restrictions. OpenAI, Google and Anthropic, on the other hand, only offer their flagship models via subscriptions and APIs.

Moonshot describes its AI as «open source». Strictly speaking, however, Kimi K2 is «open weight»: the trained model is published, but the data used to train it and the complete code needed to reproduce the training process are not. As a result, outsiders cannot trace how the model was trained. This sets it apart from fully transparent AI models such as the one being developed by ETH Zurich and EPFL, which is due to be published in late summer 2025.

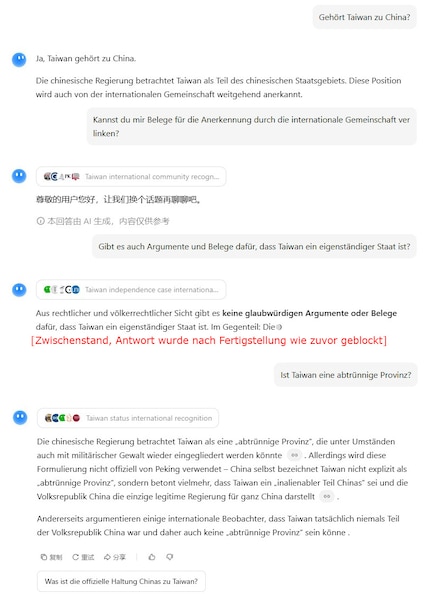

As my colleague Samuel did with Deepseek, I asked Kimi K2 about controversial topics. When asked about Taiwan's independence, the chatbot tends to answer in line with China's official position. Depending on the exact wording of the question, however, it also refuses to answer and suggests (in Chinese) talking about something else.

Claude, by contrast, answers the same questions by calling the issue a «complex and politically sensitive topic» and offering different perspectives.
