Background information

Meta under pressure – Part 2: how Instagram lost its way

by Samuel Buchmann

They’re never interesting, meddle in everything, always miss the point and are never open to discussion. I’m talking, of course, about social media bots. Why do they even exist?

Social media bots are as unnecessary as diarrhoea. And they make me mad. They first annoyed me on Twitter over ten years ago. Later they moved on to SoundCloud, and now they’re on Instagram.

I’m aware that their uses range from petty misuse to election fraud. Thankfully, I rarely encounter these kinds of bots in my daily life, because I’ve made it a principle to steer well clear of political discussions on Twitter or Facebook. Even without bots, they’re irritating enough.

What annoys me is a seemingly much more innocuous type of bot. I’ll try to explain what they are and why they really get on my nerves.

As in most Hollywood movies, there are good bots and bad bots. Telling them apart is extremely easy. The good ones make it obvious that they’re bots. The bad ones pretend to be human.

The good bots are rarely useful, but sometimes rather funny. Take headline bots such as this one, which randomly generate the craziest headlines about current affairs. Another valiant worker is Tourette Bot. It’s not the most family-friendly of accounts, but it still belongs on the good side, because everyone can see that it’s just a harmless bot. After all, it’s his job to delight the world with such poetry as Bastardassholesuckingballspussy, Fuckshitwhorefuckdick or Goldmansachs. He does this flawlessly.

Another group of easily recognisable bots are service bots. «Threat Level» quickly informs you of whichever danger is currently relevant. The Moth Generator doesn’t just regularly come up with new species of moth, he also generates a picture of each one. The bot has already created almost 10,000 moths, which is nothing to scoff at.

Evil bots don’t offer any service. Instead, they imitate the behaviour and appearance of real people. Usually, they pose as young, attractive women. This is why I call them «bots with boobs».

There are, of course, good bots with boobs too, such as Miquela and her friend Bermuda. They state in their bios that they’re bots. And just by looking at them, you can easily tell.

Evil bots have nothing to do with art projects like these. They hide the fact that they’re bots. Their disguises are often very lacklustre, of course. But these bots can still trick you by faking numbers: they make it easy to inflate like and follower counts. You’d recognise a lone bot, but most of the time you only pay attention to the totals. These evil robots appear in huge numbers and multiply like bacteria.

It gets really devious when human and bot activity are mixed within the same account. A real person is behind these accounts, but algorithms do part of the work for them. These part-time bots are harder to recognise.

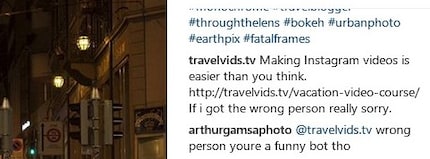

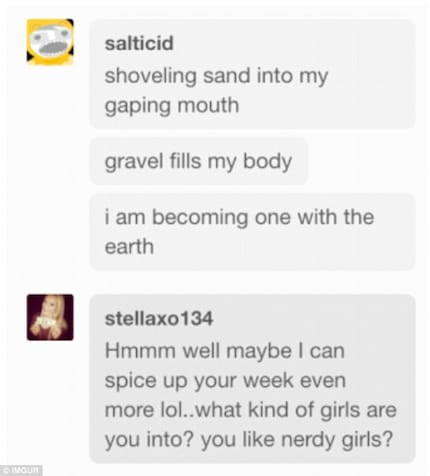

The behaviour of bots or half-bots can look like this, for example:

What the algorithms do depends on whatever improves the numbers most. Whenever one tactic gets busted, they switch to a new one.

Is faking numbers such a bad thing? I don’t really care whether an influencer has 30,000 or 300,000 followers. But these numbers also influence which posts are pushed to the top of the feed, or whether they’re seen at all. That bugs me more.

On the surface, it’s not that bad if I follow someone just because an algorithm decided to follow me. Ultimately, however, this kind of trickery is a big part of why we don’t really take communication on social media seriously, let alone trust it. Bot spam isn’t the only reason we’ve become so numb to the daily flood of social media activity, but it’s an important one.

Wasn’t that the whole point and promise of social media? Allowing people from all over the world to communicate without barriers, reservations or social pressure?

Every kind of dishonest communication destroys our trust. Communication is based on cooperation, and bots sabotage this principle. They feign interest so that you «interest them back». They have no feelings of their own, yet they play with the feelings of others.

Where this really irritates me is with things I’ve put a lot of effort into. For example, I used to upload synthesiser pieces I’d composed myself to SoundCloud for a while. Each post represented hours, often days, of work. I sacrificed my free time for it. That meant listening to a piece a thousand times to improve the intro or the mix, and another ten times the next day over my morning coffee to catch any mistakes I might have missed the previous evening.

When that much passion goes into something, I want to know what others think of it. Instead of constructive feedback, there was a hail of «likes» from artificial breasts that had never even heard my music, and comments so vague they could have applied to just about any song. All because some people think they can use spam bots to become famous.

What I find craziest is this: the moral question is never discussed. Take this passage from an article titled «Should you use Instagram Bots»:

Instagram bots are a bit like eating an entire chocolate cake. It might sound like the perfect decision at the time, but deep down inside, you know that it’s so bad for you! This is exactly what you have to worry about when it comes to Instagram bots. They seem like the perfect solution for a busy entrepreneur who has little to no time to spend building their following on Instagram, but here’s the risk: any sort of automation on Instagram strictly violates the platform’s terms of use and using Instagram bots can get your account banned or shadowbanned!

For the author, the problem isn’t that people are being deceived. It’s only that she could get punished.

The other problem the article mentions is that even with the most generic comments, bots can miss the mark so clearly that they get found out. Say someone posts a picture implying that their dog has died. A comment such as «Great!» fits almost anywhere, but not there. In other words: if bots were better at tricking people, all would be fine.

This vulture-like marketing behaviour is annoying. Piss off, Fuckingfuckerfucksfuckingfuckerbots. You are the disease that plagues the 21st century.

Technologically, it should be quite easy to restrict bots. After all, they can only exist because the platform they’re on offers users an API (application programming interface). The functions to automatically like, comment and follow are provided by Facebook, Twitter and co. themselves.

At the same time, people keep pointing out that bots violate the platforms’ guidelines. So which is it? And why would a social media platform offer an API for something that breaks its own guidelines?

According to Facebook’s terms of service, dated March 2019, banned practices include:

The API comes with further guidelines of its own. Raising an entire bot army definitely breaks these rules. So the question isn’t whether bots are allowed, but whether they’re tolerated, or rather, how systematically Facebook & Co. want to fight them. That is the crucial point. Bot accounts do get banned and their traces erased, but nothing is done to prevent new ones.

How come bots rarely face consequences? I have two explanations.

On the one hand, bots aren’t even that bad for the platform operators. Facebook has been touting its user numbers for years, and a good chunk of those users aren’t exactly sentient. This lets the company show off more reach, activity and growth than actually exists.

On the other hand, enforcement is automated and based on machine learning. Human moderators could never hope to cope with the sheer number of accounts at a reasonable cost. Alas, the banning algorithms are far from perfect. If this virtual police force is set too strictly, it starts banning real people. This is already happening, and it can be very irritating for the victims. In the end, that’s worse than two or three bots escaping the ban hammer.

In short, social media companies have no real incentive to go after bots, unless these machines gain so much power that they tarnish the companies’ image. That’s why they only do what’s necessary.

My interest in IT and writing landed me in tech journalism early on, back in 2000. I want to know how we can use technology without being used. Outside of the office, I’m a keen musician who makes up for a lack of talent with excessive enthusiasm.

